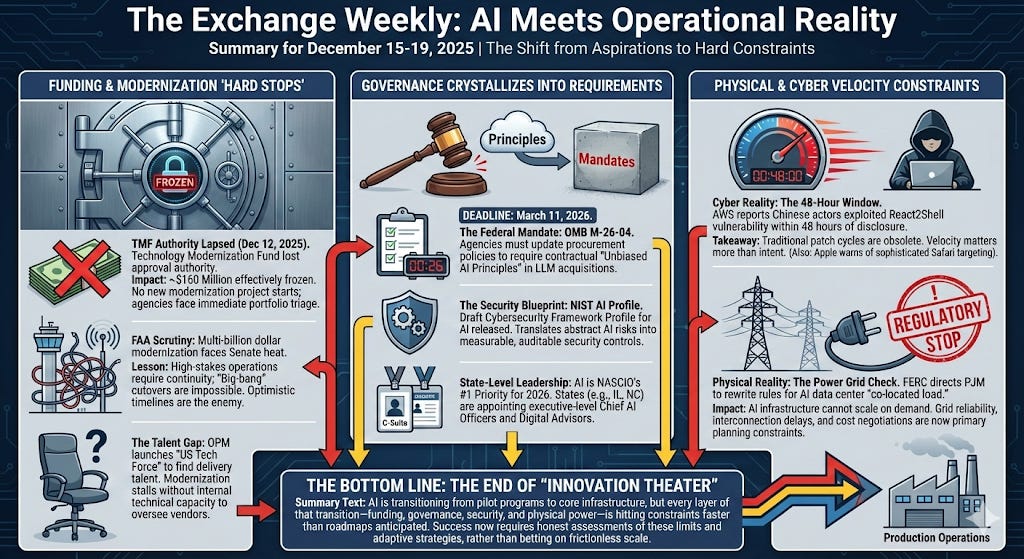

The Exchange Weekly Newsletter

December 15-19, 2025

Executive Summary

This week marked a shift from AI aspirations to operational constraints. On December 12, the Technology Modernization Fund lost its authority to approve new investments, freezing federal modernization funding just as agencies face mounting pressure to deliver AI-enabled services under compressed timelines. Congress pushed reauthorization…